Project wiki (downloads and documentation): https://github.com/alibaba/druid/wiki

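Note: https://druid.apache.org/ is Apache Druid, a separate real-time analytics database, not Alibaba's connection pool, so there is no need to look there.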

Here we go straight to the Spring Boot integration.

<!-- Druid database connection pool -->
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <version>1.2.8</version>
    <!-- Other versions may cause errors; this is the Druid version used with Spring Boot 2.5.5 -->
</dependency>

Configure the data source:

# Production
spring.datasource.url=jdbc:mysql://127.0.0.1:3306/XXDB?useUnicode=true&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=UTC&useSSL=false&characterEncoding=utf8
spring.datasource.driver-class-name=com.mysql.cj.jdbc.Driver
spring.datasource.username=root
spring.datasource.password=root

# Druid pool settings
spring.datasource.druid.initial-size=5
spring.datasource.druid.max-active=20
spring.datasource.druid.min-idle=5
#spring.datasource.druid.max-wait=60000
spring.datasource.druid.max-wait=10000
spring.datasource.druid.pool-prepared-statements=true
spring.datasource.druid.max-pool-prepared-statement-per-connection-size=20
# spring.datasource.druid.max-open-prepared-statements= # equivalent to the line above

# The keys below are left empty as placeholders: fill them in or delete them
spring.datasource.druid.validation-query=
spring.datasource.druid.validation-query-timeout=
spring.datasource.druid.test-on-borrow=
spring.datasource.druid.test-on-return=
spring.datasource.druid.test-while-idle=
spring.datasource.druid.time-between-eviction-runs-millis=
spring.datasource.druid.min-evictable-idle-time-millis=
spring.datasource.druid.max-evictable-idle-time-millis=
spring.datasource.druid.filters=stat

# Druid slow-SQL log. To try it, run SELECT SLEEP(10), which takes 10 seconds and exceeds the threshold below
spring.datasource.druid.filter.stat.log-slow-sql=true
spring.datasource.druid.filter.stat.slow-sql-millis=5000
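The sizes above only work if they agree with one another. As far as I can tell from Druid's source, the pool refuses to initialize when max-active is below min-idle, when initial-size exceeds max-active, or when max-evictable-idle-time-millis is shorter than min-evictable-idle-time-millis. The class below is a hypothetical helper (`PoolSettingsCheck` is not part of Druid) that runs the same kind of checks on plain numbers so a typo can be caught before deployment; the eviction times in `main` are assumed example values, because the file above leaves them empty.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical pre-flight check for Druid pool settings; not part of Druid itself. */
final class PoolSettingsCheck {

    /** Lists inconsistencies between the settings; an empty list means they look consistent. */
    static List<String> problems(int initialSize, int maxActive, int minIdle,
                                 long minEvictableIdleMillis, long maxEvictableIdleMillis) {
        List<String> problems = new ArrayList<>();
        if (maxActive <= 0) {
            problems.add("max-active must be positive");
        }
        if (minIdle > maxActive) {
            problems.add("min-idle (" + minIdle + ") exceeds max-active (" + maxActive + ")");
        }
        if (initialSize > maxActive) {
            problems.add("initial-size (" + initialSize + ") exceeds max-active (" + maxActive + ")");
        }
        if (maxEvictableIdleMillis < minEvictableIdleMillis) {
            problems.add("max-evictable-idle-time-millis is shorter than min-evictable-idle-time-millis");
        }
        return problems;
    }

    public static void main(String[] args) {
        // initial-size=5, max-active=20, min-idle=5 as configured above;
        // the eviction times are assumed values (5 min and 7 h) since the file leaves them empty
        System.out.println(problems(5, 20, 5, 300_000L, 25_200_000L));   // []
        // a deliberately broken example: more initial connections than the pool may hold
        System.out.println(problems(30, 20, 5, 300_000L, 25_200_000L));  // [initial-size (30) exceeds max-active (20)]
    }
}
```

Running such a check in a unit test keeps a mistyped value from surfacing only as a startup failure on the server.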

The YAML below is older, so feel free to ignore it; I keep it only for comparison.

spring:
  # Database connection settings
  datasource:
    url: jdbc:mysql://192.168.3.110:3306/govbuy?useUnicode=true&useJDBCCompliantTimezoneShift=true&useLegacyDatetimeCode=false&serverTimezone=UTC&useSSL=false&characterEncoding=utf8
    username: ****
    password: ****
    driver-class-name: com.mysql.jdbc.Driver
    # With this, Spring Boot's default pool (Hikari) is not used
    type: com.alibaba.druid.pool.DruidDataSource
    druid:
      initial-size: 5
      min-idle: 5
      max-active: 20
      test-while-idle: true
      test-on-borrow: false
      test-on-return: false
      pool-prepared-statements: true
      max-pool-prepared-statement-per-connection-size: 20
      max-wait: 60000
      time-between-eviction-runs-millis: 60000
      min-evictable-idle-time-millis: 30000
      filters: stat
      async-init: true
      # Enable mergeSql and slow-SQL logging through connectProperties
      connection-properties: druid.stat.mergeSql=true;druid.stat.slowSqlMillis=5000
      # Monitoring console settings, such as the login account and password
      monitor:
        # The values below are overridden by the DruidConfig class and have no effect
        allow: 127.0.0.1
        loginUsername: ok
        loginPassword: ok

Add the DruidConfig configuration class:

import com.alibaba.druid.pool.DruidDataSource;

import com.alibaba.druid.support.http.StatViewServlet;

import com.alibaba.druid.support.http.WebStatFilter;

import org.springframework.boot.context.properties.ConfigurationProperties;

import org.springframework.boot.web.servlet.FilterRegistrationBean;

import org.springframework.boot.web.servlet.ServletRegistrationBean;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

import java.util.Arrays;

import java.util.HashMap;

import java.util.Map;

/**

* @author : zanglikun

* @date : 2021/9/25 17:11

* @Version: 1.0

* @Desc : Druid 配置文件

*/

@Configuration

public class DruidConfig {

// 以下druid()方法配置会使在application.yml中后半部分的配置生效

@ConfigurationProperties(prefix = "spring.datasource")

@Bean

public DataSource druidDataSource() {

return new DruidDataSource();

}

// 配置Druid的监控。配置一个管理后台的Servlet

@Bean

public ServletRegistrationBean statViewServlet() {

ServletRegistrationBean bean = new ServletRegistrationBean(new StatViewServlet(), "/druid/*");

Map<String, String> initParams = new HashMap<>();

initParams.put("loginUsername", "admin");

initParams.put("loginPassword", "123456");

initParams.put("allow", "");//默认就是允许所有访问

bean.setInitParameters(initParams);

return bean;

}

// 2、配置一个web监控的filter

@Bean

public FilterRegistrationBean webStatFilter() {

FilterRegistrationBean bean = new FilterRegistrationBean();

bean.setFilter(new WebStatFilter());

Map<String, String> initParams = new HashMap<>();

initParams.put("exclusions", "*.js,*.gif,*.jpg,*.png,*.css,*.ico,/druid/*");

bean.setInitParameters(initParams);

bean.setUrlPatterns(Arrays.asList("/*"));

return bean;

}

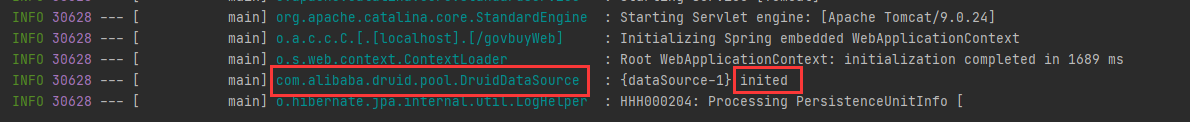

}启动项目

Initialization succeeded!

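Then open the project's base URL with /druid appended, e.g. 127.0.0.1:8080/druid, and log in with the loginUsername and loginPassword set in DruidConfig.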
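Which clients may open this console is controlled by the servlet's allow init parameter (DruidConfig sets it to empty). The Druid wiki also documents a deny parameter and describes the rules as: deny takes precedence over allow, an empty allow admits everyone, and each entry is an IPv4 address or an address with a prefix length such as 192.168.1.0/24. The sketch below re-implements those documented rules in plain Java to make them concrete; `MonitorAccess` is a hypothetical name and this is not Druid's own code, so check the behaviour of your Druid version before relying on it.

```java
/** Hypothetical sketch of the documented StatViewServlet allow/deny rules (not Druid code). */
final class MonitorAccess {

    /** Parses dotted IPv4 text ("192.168.1.66") into a 32-bit int. */
    static int toInt(String ip) {
        String[] parts = ip.trim().split("\\.");
        if (parts.length != 4) {
            throw new IllegalArgumentException("not an IPv4 address: " + ip);
        }
        int value = 0;
        for (String part : parts) {
            int octet = Integer.parseInt(part);
            if (octet < 0 || octet > 255) {
                throw new IllegalArgumentException("octet out of range in " + ip);
            }
            value = (value << 8) | octet;
        }
        return value;
    }

    /** True if ip falls under one entry: "a.b.c.d" (exact) or "a.b.c.d/n" (first n bits equal). */
    static boolean matches(String entry, String ip) {
        String[] parts = entry.trim().split("/");
        int prefix = parts.length == 2 ? Integer.parseInt(parts[1].trim()) : 32;
        // Java masks int shift distances to 5 bits, so a /0 prefix needs its own case
        int mask = prefix == 0 ? 0 : -1 << (32 - prefix);
        return (toInt(parts[0]) & mask) == (toInt(ip) & mask);
    }

    /** True if ip matches any entry of a comma-separated list; blank entries are skipped. */
    static boolean matchesAny(String list, String ip) {
        for (String entry : list.split(",")) {
            if (!entry.trim().isEmpty() && matches(entry, ip)) {
                return true;
            }
        }
        return false;
    }

    /** Access decision following the documented allow/deny semantics. */
    static boolean permitted(String allow, String deny, String ip) {
        if (matchesAny(deny, ip)) {
            return false;                                       // deny takes precedence
        }
        return allow.trim().isEmpty() || matchesAny(allow, ip); // empty allow admits everyone
    }

    public static void main(String[] args) {
        System.out.println(permitted("", "", "10.0.0.8"));                                // true
        System.out.println(permitted("127.0.0.1", "", "10.0.0.8"));                       // false
        System.out.println(permitted("192.168.1.0/24", "192.168.1.66", "192.168.1.66"));  // false
    }
}
```

In practice this means the empty allow in DruidConfig exposes the console (behind its login) to every client that can reach the port; setting allow to 127.0.0.1 or an office subnet narrows it.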

If the data source page shows this message:

The fixes I found did not work, so just ignore it.

Logging Druid output with log4j2

(This part is based on an original article on GitHub.)

Steps in brief: remove the default logging dependency in pom.xml, add log4j2.xml, and configure application.properties (or application.yml).

1. pom.xml dependencies

<!-- Remove Spring Boot's default Logback dependency -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-web</artifactId>
    <exclusions>
        <exclusion>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-logging</artifactId>
        </exclusion>
    </exclusions>
</dependency>
<!-- Logging -->
<dependency>
    <groupId>org.springframework.boot</groupId>
    <artifactId>spring-boot-starter-log4j2</artifactId>
</dependency>
<dependency>
    <groupId>org.springframework</groupId>
    <artifactId>spring-jdbc</artifactId>
</dependency>
<!-- Database connection pool -->
<dependency>
    <groupId>com.alibaba</groupId>
    <artifactId>druid-spring-boot-starter</artifactId>
    <!-- If the starter is already declared (1.2.8 earlier), keep a single declaration -->
    <version>1.1.6</version>
</dependency>

2. Configure application.properties

# Log output
spring.datasource.druid.filter.slf4j.enabled=true
spring.datasource.druid.filter.slf4j.statement-create-after-log-enabled=false
spring.datasource.druid.filter.slf4j.statement-close-after-log-enabled=false
spring.datasource.druid.filter.slf4j.result-set-open-after-log-enabled=false
spring.datasource.druid.filter.slf4j.result-set-close-after-log-enabled=false

Or the same settings in YAML (under spring.datasource.druid):

filter:
  slf4j:
    enabled: true
    statement-create-after-log-enabled: false
    statement-close-after-log-enabled: false
    result-set-open-after-log-enabled: false
    result-set-close-after-log-enabled: false

3. Add the logging configuration to log4j2.xml (complete; can be copied as-is)

<?xml version="1.0" encoding="UTF-8"?>
<configuration status="OFF">
    <appenders>
        <Console name="Console" target="SYSTEM_OUT">
            <!-- Accept DEBUG and higher levels; reject everything below DEBUG -->
            <ThresholdFilter level="DEBUG" onMatch="ACCEPT" onMismatch="DENY"/>
            <PatternLayout pattern="[%d{HH:mm:ss.SSS}] %-5level %class{36} %L %M - %msg%xEx%n"/>
        </Console>

        <!-- DEBUG-level events only, written to logs/debug.log -->
        <!-- When the file exceeds the size limit (or at the daily rollover), it is archived, gzip-compressed, into a year-month folder -->
        <RollingFile name="RollingFileDebug" fileName="./logs/debug.log"
                     filePattern="logs/$${date:yyyy-MM}/debug-%d{yyyy-MM-dd}-%i.log.gz">
            <Filters>
                <ThresholdFilter level="DEBUG"/>
                <ThresholdFilter level="INFO" onMatch="DENY" onMismatch="NEUTRAL"/>
            </Filters>
            <PatternLayout pattern="[%d{yyyy-MM-dd HH:mm:ss}] %-5level %class{36} %L %M - %msg%xEx%n"/>
            <Policies>
                <SizeBasedTriggeringPolicy size="500 MB"/>
                <TimeBasedTriggeringPolicy/>
            </Policies>
        </RollingFile>

        <!-- INFO-level events, written to logs/info.log -->
        <RollingFile name="RollingFileInfo" fileName="./logs/info.log"
                     filePattern="logs/$${date:yyyy-MM}/info-%d{yyyy-MM-dd}-%i.log.gz">
            <Filters>
                <!-- Accept only INFO; reject every other level -->
                <ThresholdFilter level="INFO"/>
                <ThresholdFilter level="WARN" onMatch="DENY" onMismatch="NEUTRAL"/>
            </Filters>
            <PatternLayout pattern="[%d{yyyy-MM-dd HH:mm:ss}] %-5level %class{36} %L %M - %msg%xEx%n"/>
            <Policies>
                <SizeBasedTriggeringPolicy size="500 MB"/>
                <TimeBasedTriggeringPolicy/>
            </Policies>
        </RollingFile>

        <!-- WARN-level events, written to logs/warn.log -->
        <RollingFile name="RollingFileWarn" fileName="./logs/warn.log"
                     filePattern="logs/$${date:yyyy-MM}/warn-%d{yyyy-MM-dd}-%i.log.gz">
            <Filters>
                <ThresholdFilter level="WARN"/>
                <ThresholdFilter level="ERROR" onMatch="DENY" onMismatch="NEUTRAL"/>
            </Filters>
            <PatternLayout pattern="[%d{yyyy-MM-dd HH:mm:ss}] %-5level %class{36} %L %M - %msg%xEx%n"/>
            <Policies>
                <SizeBasedTriggeringPolicy size="500 MB"/>
                <TimeBasedTriggeringPolicy/>
            </Policies>
        </RollingFile>

        <!-- ERROR-level (and higher) events, written to logs/error.log -->
        <RollingFile name="RollingFileError" fileName="./logs/error.log"
                     filePattern="logs/$${date:yyyy-MM}/error-%d{yyyy-MM-dd}-%i.log.gz">
            <ThresholdFilter level="ERROR"/>
            <PatternLayout pattern="[%d{yyyy-MM-dd HH:mm:ss}] %-5level %class{36} %L %M - %msg%xEx%n"/>
            <Policies>
                <SizeBasedTriggeringPolicy size="500 MB"/>
                <TimeBasedTriggeringPolicy/>
            </Policies>
        </RollingFile>

        <!-- Appender for Druid's SQL log -->
        <RollingFile name="druidSqlRollingFile" fileName="./logs/druid-sql.log"
                     filePattern="logs/$${date:yyyy-MM}/druid-sql-%d{yyyy-MM-dd}-%i.log.gz">
            <PatternLayout pattern="[%d{yyyy-MM-dd HH:mm:ss}] %-5level %L %M - %msg%xEx%n"/>
            <Policies>
                <SizeBasedTriggeringPolicy size="500 MB"/>
                <TimeBasedTriggeringPolicy/>
            </Policies>
        </RollingFile>
    </appenders>

    <loggers>
        <root level="DEBUG">
            <appender-ref ref="Console"/>
            <appender-ref ref="RollingFileInfo"/>
            <appender-ref ref="RollingFileWarn"/>
            <appender-ref ref="RollingFileError"/>
            <appender-ref ref="RollingFileDebug"/>
        </root>

        <!-- Druid SQL statements go only to druid-sql.log (additivity="false") -->
        <logger name="druid.sql.Statement" level="debug" additivity="false">
            <appender-ref ref="druidSqlRollingFile"/>
        </logger>

        <!-- Quiet down noisy framework loggers -->
        <Logger name="org.apache.catalina.startup.DigesterFactory" level="error"/>
        <Logger name="org.apache.catalina.util.LifecycleBase" level="error"/>
        <Logger name="org.apache.coyote.http11.Http11NioProtocol" level="warn"/>
        <logger name="org.apache.sshd.common.util.SecurityUtils" level="warn"/>
        <Logger name="org.apache.tomcat.util.net.NioSelectorPool" level="warn"/>
        <Logger name="org.crsh.plugin" level="warn"/>
        <logger name="org.crsh.ssh" level="warn"/>
        <Logger name="org.eclipse.jetty.util.component.AbstractLifeCycle" level="error"/>
        <Logger name="org.hibernate.validator.internal.util.Version" level="warn"/>
        <logger name="org.springframework.boot.actuate.autoconfigure.CrshAutoConfiguration" level="warn"/>
        <logger name="org.springframework.boot.actuate.endpoint.jmx" level="warn"/>
        <logger name="org.thymeleaf" level="warn"/>
    </loggers>
</configuration>

Test it any way you like; the output looks like this:

[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, pstmt-20000} created. INSERT INTO city ( id,name,state ) VALUES( ?,?,? )

[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, pstmt-20000} Parameters : [null, b2ffa7bd-6b53-4392-aa39-fdf8e172ddf9, a9eb5f01-f6e6-414a-bde3-865f72097550]

[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, pstmt-20000} Types : [OTHER, VARCHAR, VARCHAR]

[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, pstmt-20000} executed. 5.113815 millis. INSERT INTO city ( id,name,state ) VALUES( ?,?,? )

[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, stmt-20001} executed. 0.874903 millis. SELECT LAST_INSERT_ID()

[2018-02-07 14:15:52] DEBUG 134 statementLog - {conn-10001, stmt-20002, rs-50001} query executed. 0.622665 millis.
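The "executed. ... millis." part of each line carries the statement's execution time, so druid-sql.log can be searched for slow statements without opening the web console. A minimal sketch, assuming the line format is exactly the one in the sample above (other Druid versions or a different PatternLayout may change it); `DruidSqlLogScan` is a hypothetical helper name, and treating a statement as slow when its time reaches the threshold is my choice for the sketch, not a documented Druid rule.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper: finds slow statements in druid-sql.log lines shaped like the sample above. */
final class DruidSqlLogScan {

    // Matches "{conn-10001, pstmt-20000} executed. 5.113815 millis. INSERT ..."
    // and "{conn-10001, stmt-20002, rs-50001} query executed. 0.622665 millis."
    private static final Pattern EXECUTED = Pattern.compile(
            "\\{([^}]*)\\} (?:query )?executed\\. ([0-9.]+) millis\\.\\s*(.*)$");

    /** One timed statement: the Druid ids, the execution time and the SQL text (may be empty). */
    static final class Timing {
        final String ids;
        final double millis;
        final String sql;

        Timing(String ids, double millis, String sql) {
            this.ids = ids;
            this.millis = millis;
            this.sql = sql;
        }
    }

    /** Returns the statements whose execution time is at least thresholdMillis. */
    static List<Timing> slowStatements(List<String> lines, double thresholdMillis) {
        List<Timing> slow = new ArrayList<>();
        for (String line : lines) {
            Matcher m = EXECUTED.matcher(line);
            if (m.find()) { // "created.", "Parameters" and "Types" lines do not match
                double millis = Double.parseDouble(m.group(2));
                if (millis >= thresholdMillis) {
                    slow.add(new Timing(m.group(1), millis, m.group(3)));
                }
            }
        }
        return slow;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
                "[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, pstmt-20000} executed. 5.113815 millis. INSERT INTO city ( id,name,state ) VALUES( ?,?,? )",
                "[2018-02-07 14:15:50] DEBUG 134 statementLog - {conn-10001, stmt-20001} executed. 0.874903 millis. SELECT LAST_INSERT_ID()");
        // 1 ms keeps the demo small; 5000 would mirror slow-sql-millis in application.properties
        for (Timing t : slowStatements(sample, 1.0)) {
            System.out.println(t.millis + " ms " + t.ids + " " + t.sql);
        }
    }
}
```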

Done. Now go and test it.
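When checking the output files, it helps to know where each event ends up. In the log4j2.xml above, the debug, info and warn files each pair a ThresholdFilter for their own level with a second one that denies the next level up, so each keeps exactly one level; the error file keeps ERROR and above, the console takes everything from DEBUG upward, and druid.sql.Statement goes only to druid-sql.log because of additivity="false". The plain-Java model below encodes those rules for the root logger and the Druid logger only (the other named loggers in the file are not modeled); `AppenderRouting` and the logger name `com.example.Demo` are hypothetical, and this is a reasoning aid, not Log4j code.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

/** Hypothetical model of the appender routing in the log4j2.xml above (not Log4j code). */
final class AppenderRouting {

    // Ordered from least to most severe, as Log4j 2 ranks them
    enum Level { TRACE, DEBUG, INFO, WARN, ERROR, FATAL }

    /** An appender that keeps levels in [from, untilExclusive); null means no upper bound. */
    static final class Band {
        final String appender;
        final Level from;
        final Level untilExclusive;

        Band(String appender, Level from, Level untilExclusive) {
            this.appender = appender;
            this.from = from;
            this.untilExclusive = untilExclusive;
        }

        boolean accepts(Level level) {
            return level.compareTo(from) >= 0
                    && (untilExclusive == null || level.compareTo(untilExclusive) < 0);
        }
    }

    // Appenders attached to <root level="DEBUG">, with the band their filters let through
    static final List<Band> ROOT_APPENDERS = Arrays.asList(
            new Band("Console", Level.DEBUG, null),                 // ACCEPT >= DEBUG
            new Band("RollingFileDebug", Level.DEBUG, Level.INFO),  // DEBUG only
            new Band("RollingFileInfo", Level.INFO, Level.WARN),    // INFO only
            new Band("RollingFileWarn", Level.WARN, Level.ERROR),   // WARN only
            new Band("RollingFileError", Level.ERROR, null));       // ERROR and FATAL

    /** Appenders that would receive an event from the given logger at the given level. */
    static List<String> appendersFor(String loggerName, Level level) {
        if (level.compareTo(Level.DEBUG) < 0) {
            return Collections.emptyList(); // both modeled loggers are set to DEBUG
        }
        if (loggerName.equals("druid.sql.Statement")) {
            // additivity="false": the event does not propagate to the root appenders
            return Collections.singletonList("druidSqlRollingFile");
        }
        List<String> result = new ArrayList<>();
        for (Band band : ROOT_APPENDERS) {
            if (band.accepts(level)) {
                result.add(band.appender);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        for (Level level : Level.values()) {
            System.out.println(level + " -> " + appendersFor("com.example.Demo", level));
        }
    }
}
```

The model makes one consequence visible: an INFO event lands in the console and info.log but not in debug.log, so each file holds only its own level rather than "this level and above".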

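Note:

Everything above was written from the author's own hands-on work. Please do not copy it wholesale; if you repost it, credit the original link: www.zanglikun.com

Third-party platforms do not update this article promptly. If something here seems incomplete, visit my Java blog and search for the title keywords to get the latest, complete version ❤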
